What the Proofs Assume

The Assumptions

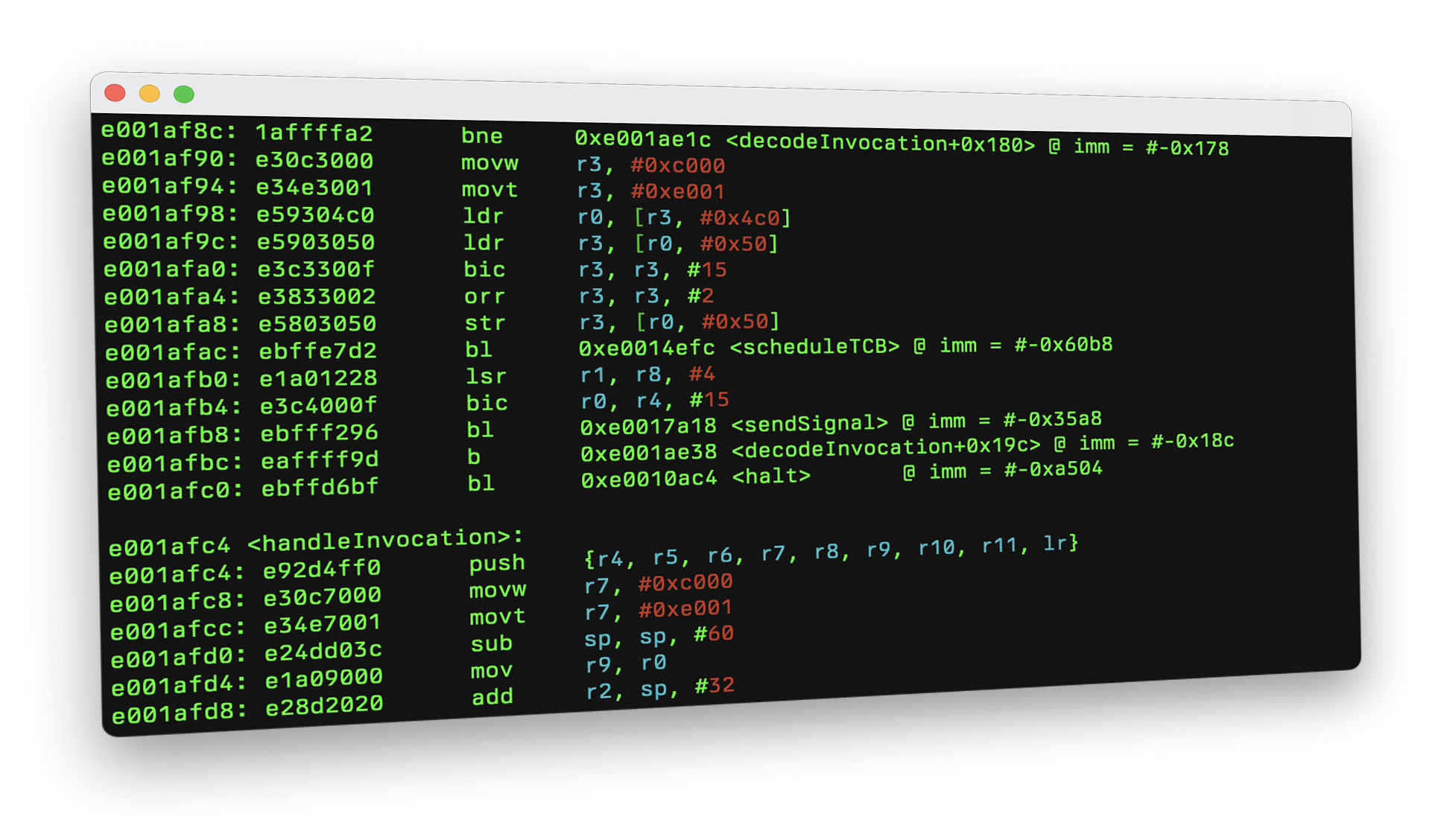

With a proof in formal logic, it is important to understand what its basic assumptions are, because this is where fault can still occur. Our proof about the seL4 microkernel goes down to the level of the C code, and for some architectures even to the binary code.

Assumptions are not limitations or problems. Being able to clearly state an exhaustive list of assumptions means that the work needed to fully trust a system is massively reduced from looking at many thousands of lines of code to a number of small, specific, and easy to understand pieces. They are the following:

- Assembly: the seL4 kernel, like all operating system kernels, contains some assembly code, about 340 lines of ARM assembly in our case, and a similar amount for other architectures. For seL4, this concerns mainly entry to and exit from the kernel, as well as direct hardware accesses. For the proof, we assume this code is correct.

- Hardware: we assume the hardware works correctly. In practice, this means the hardware is assumed not to be tampered with, and working according to specification. It also means, it must be run within its operating conditions.

- Hardware management: the proof makes only the most minimal assumptions on the underlying hardware. It abstracts from cache consistency, cache colouring and TLB (translation lookaside buffer) management. The proof assumes these functions are implemented correctly in the assembly layer mentioned above and that the hardware works as advertised. The proof also assumes that especially these three hardware management functions do not have any effect on the behaviour of the kernel. This is true if they are used correctly. The proofs state these machine interface assumptions explicitly in formal logic.

- Boot code: the proof currently is about the operation of the kernel after it has been loaded correctly into memory and brought into a consistent, minimal initial state. This leaves out about 1,200 lines of the code base that a kernel programmer would usually consider to be part of the kernel.

- Virtual memory: under the standard of 'normal' formal verification projects, virtual memory does not need to be considered an assumption of this proof. However, the degree of assurance is lower than for other parts of our proof where we reason from first principle. In more detail, virtual memory is the hardware mechanism that the kernel uses to protect itself from user programs and user programs from each other. This part is fully verified. However, virtual memory introduces a complication, because it can affect how the kernel itself accesses memory. Our execution model assumes a certain standard behaviour of memory while the kernel executes, and we justify this assumption by proving the necessary conditions on kernel behaviour. The thing is: you have to trust us that we got all necessary conditions and that we got them right. Our machine-checked proof doesn't force us to be complete at this point. In short, in this part of the proof, unlike the other parts, the proof is not from first principles and there is potential for human error.

- DMA: we assume that the CPU and MMU are the only devices that access memory directly. The proof can correctly ignore memory-mapped registers of devices, but has to assume that DMA devices are either not present or do not misbehave, for instance by overwriting the kernel. In practise this means, that while normal user-level drivers cannot break kernel security, drivers for DMA enabled devices can and must be formally verified for the proof to carry over. Note that user-level drivers will generally be given access to memory-mapped hardware registers, and on some architectures writing to read-only hardware registers may generate failures that could halt the system. Such drivers must be verified or or wrapped by a verified component that validates such accesses to satisfy the proof assumptions. Check out the FAQ entry on DMA for what this means in practice.

- Information side-channels: this assumption applies to the confidentiality proof only and is not present for functional correctness or integrity. The assumption is that the binary-level model of the hardware captures all relevant information channels. We know this not to be the case. This is not a problem for the validity of the confidentiality proof, but means that its conclusion (that secrets do not leak) holds only for the channels visible in the model. This is a standard situation in information flow proofs: they can never be absolute. As mentioned above, in practice the proof covers all in-kernel storage channels but does not cover timing channels

Note that we do not need to trust the compiler and linker any more on architectures that are supported by our binary verification. Their output is formally verified for seL4 by an automatic tool if the kernel is compiled with the correct compiler settings that can be configured in the build system.

What do these assumptions mean?

The reduced proof assumptions mean that we do not need to trust the compiler or linker, but there may still be faults remaining in specific low-level parts of the kernel (TLB, cache handling, handwritten assembly, boot code). These parts are thoroughly manually reviewed.

These specific assumptions are not a limitation of the general formal verification approach. In theory, it is possible to eliminate all of them: there are at least two research groups that have demonstrated successful formal verification of assembly code and low-level hardware management functions and we have ourselves proved an earlier version of the boot code correct down to the level of a precise, executable specification. There are still significant scientific challenges in unifying all of these into a single framework, but it is clear at this point that it can be done.

With all the purity and strength of mathematical proof it is easy to get carried away. There is a fundamental theoretical limit of formal verification and other verification techniques such as testing: there will always be some bottom level of assumptions about the physical world left and these assumptions have to be validated by other means. Mathematical proof is proof as long as it talks about formal concepts. It is when assumptions connect to reality where things may still fail. Albert Einstein is quoted as saying "As far as the laws of mathematics refer to reality, they are not certain; and as far as they are certain, they do not refer to reality." For instance, if the hardware is faulty, or if a cosmic ray randomly changes memory, the correctness predictions of our proof do not apply. Neither do any traditional tests or verification methods help against cosmic rays, but it is important to keep in mind that even with mathematical proof, there are no absolute guarantees about the physical world.

There are two other assumptions that we do not include in the list above, because they are of a different kind:

- We assume the axioms of higher-order logic are logically consistent.

- We assume our prover checks this particular proof correctly.

The first is a fundamental question of formal logic. If this is not true, mathematics in general has a much bigger problem than one verified OS kernel. The second is more interesting, but equally unlikely to be false.

From security properties down to C code, we use the proof assistant Isabelle/HOL. This prover belongs to the so-called LCF family of provers and is engineered to minimise the code that needs to trusted for the proof to be correct. It also supports external proof checking by a separate small checking tool. There is no absolute guarantee that the proof is correct, but in general humans are good at creating proofs, computers are very good at checking them. It is an easy problem for computers. If you are worried about the proof, be worried about the assumptions in the first part. They are much more likely to cause problems.

From C code to binary code, we employ a set of automated widely-used verification tools. These are: SONOLAR, Z3, Isabelle/HOL, and HOL4. While Z3 and SONOLAR do not provide the same level of assurance as Isabelle/HOL and HOL4. The combination of these tools still achieves a very high standard of assurance for this last verification step.